Understanding the force that’s reshaping enterprise architecture in the age of artificial intelligence

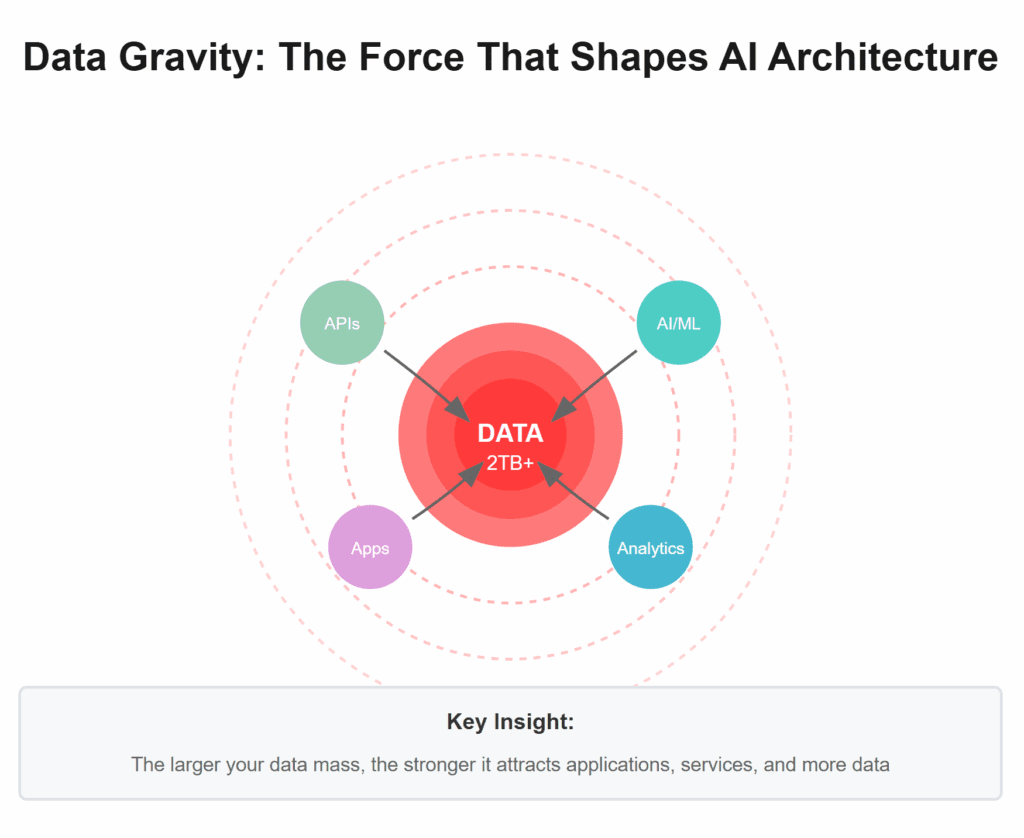

In 2010, Dave McCrory, a GE Digital engineer, coined a term that would prove prophetic: “Data Gravity.” Like physical gravity, data attracts applications, services, and more data. The larger the mass of data, the stronger it pulls. And just like celestial bodies, moving large data masses requires tremendous energy and cost.

At the time, McCrory was trying to explain why applications tend to move toward data rather than the other way around. A decade later, the phenomenon his metaphor describes has become the defining force in enterprise AI architecture. Understanding data gravity isn’t just useful; it’s essential for navigating the world of Enterprise AI.

How Data Gravity Actually Works

Imagine your Salesforce instance after 10 years of use. It contains millions of customer records, billions of activity logs, and countless custom objects capturing your unique business processes. This isn’t just data. It is your institutional memory encoded in digital form.

Now consider what happens when you want to build an AI-powered customer service bot:

Option 1: Move the Data

- Export all customer history to an external AI platform

- Deal with API rate limits (Slack, for example, now limits some third-party apps to 1 request per minute on key message-history endpoints)

- Pay egress fees (AWS, for example, charges $0.09/GB to transfer data out to the internet after the first 100GB each month)

- Maintain synchronization as new data arrives

- Handle security, compliance, and privacy across systems

Option 2: Move the Compute

- Use Salesforce’s native Agentforce AI

- Access data at memory speed, not network speed

- No synchronization delays or consistency issues

- Security and compliance maintained within one perimeter

- AI improves automatically as your data grows

The physics are clear: it’s far easier to bring compute to data than data to compute. This is data gravity in action.
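To put a rough number on the egress line item in Option 1, here is a minimal sketch that applies the flat rate quoted above ($0.09/GB after the first 100GB). It is a simplification under stated assumptions: one billing month, a single price tier, and decimal gigabytes. Real cloud pricing adds volume tiers and varies by region and destination.

```python
FREE_TIER_GB = 100   # free allowance quoted above (assumed to be per month)
RATE_PER_GB = 0.09   # USD per GB beyond the allowance (flat-rate simplification)


def egress_cost_usd(gb_transferred: float) -> float:
    """Estimate one month's egress bill for moving `gb_transferred` GB out."""
    billable_gb = max(0.0, gb_transferred - FREE_TIER_GB)
    return round(billable_gb * RATE_PER_GB, 2)


# Hypothetical example: exporting 2TB (2,000GB) of customer history once.
print(egress_cost_usd(2_000))  # 171.0
```

Because Option 1 also requires ongoing synchronization, every full re-export incurs this charge again, on top of the incremental changes.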

Why AI Makes Data Gravity Exponentially Stronger

Traditional applications could function with subsets of data or simplified extracts. A reporting tool might only need monthly aggregates. An application integration might only pass specific fields. Enterprise AI is different: its appetite for data is voracious, and fundamentally unlike the narrow extracts that traditional ETL pipelines deliver.

AI’s Insatiable Data Appetite

Large Language Models and other machine learning systems improve with more data, not linearly but along power-law curves. The difference between training on 1 million customer interactions and 10 million isn’t 10x better results; it’s often the difference between a useless bot and one that seems almost human.

This creates what we call the “AI Data Paradox”: The more valuable your AI becomes, the more data it needs. The more data it consumes, the harder it becomes to move that data anywhere else. The harder it becomes to move, the more locked in you become to the platform hosting it.
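To make the power-law claim concrete, here is a minimal sketch assuming the functional form commonly used in neural scaling-law studies, where loss falls as L(D) = (D_c / D)^α as dataset size D grows. The constant `D_C` and the exponent `ALPHA` are illustrative assumptions, not measured values for any particular model or for customer-service data.

```python
def power_law_loss(dataset_size: float, d_c: float, alpha: float) -> float:
    """Loss under an assumed power law L(D) = (D_c / D) ** alpha."""
    return (d_c / dataset_size) ** alpha


def loss_ratio_for_scale_up(factor: float, alpha: float) -> float:
    """Factor by which loss shrinks when data grows by `factor` (independent of D_c)."""
    return factor ** -alpha


ALPHA = 0.1   # hypothetical exponent, for illustration only
D_C = 1e13    # hypothetical scale constant

small = power_law_loss(1_000_000, D_C, ALPHA)    # 1 million interactions
large = power_law_loss(10_000_000, D_C, ALPHA)   # 10 million interactions
print(f"10x data multiplies loss by {large / small:.3f}")  # 0.794
print(f"closed form: {loss_ratio_for_scale_up(10, ALPHA):.3f}")
```

Under this form, each tenfold increase in data multiplies loss by the same factor, so improvements keep arriving without being proportional to the added data. How a given loss reduction translates into perceived answer quality is outside what the formula captures.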

The Compound Effect

Data gravity compounds in three ways:

- Volume: Every API call, every user action, every system event adds mass

- Velocity: Real-time AI needs real-time data, making batch exports obsolete

- Value: AI-generated insights become new data, adding to the gravitational mass
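The compounding can be illustrated with a toy model. This is a minimal sketch under made-up assumptions: each period adds a fixed amount of new raw data (volume), and AI-generated insights feed a fixed fraction of the existing mass back in as new data (value). Velocity is not modeled, and all parameter values are hypothetical.

```python
def simulate_data_mass(initial_gb: float, new_raw_gb: float,
                       insight_rate: float, periods: int) -> list[float]:
    """Return total data mass after each period under a toy compounding model.

    Each period: mass += new_raw_gb (volume) + insight_rate * mass (AI-generated data).
    """
    mass = initial_gb
    history = []
    for _ in range(periods):
        generated = insight_rate * mass  # hypothetical insights derived from existing mass
        mass += new_raw_gb + generated
        history.append(mass)
    return history


# Hypothetical: 1,000GB today, 50GB of new raw data per month.
linear = simulate_data_mass(1_000, 50, insight_rate=0.0, periods=24)
compounding = simulate_data_mass(1_000, 50, insight_rate=0.02, periods=24)
print(f"after 24 months without feedback: {linear[-1]:,.0f} GB")  # 2,200 GB
print(f"after 24 months with 2% feedback: {compounding[-1]:,.0f} GB")
```

Without the feedback term, mass grows linearly; with it, growth is geometric, which is the "compound" part of the argument.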

The Strategic Implications for Your Organization

Understanding data gravity fundamentally changes how you evaluate platform decisions:

The Hidden Cost of “Best-of-Breed”

That beautiful composable architecture with specialized tools for every function? Each requires data replication. In a world of unrestricted APIs, this was manageable. In the new economics of platform-specific AI, it’s becoming economically and technically unviable.

Consider a typical enterprise with:

- CRM in Salesforce (2TB of customer data)

- Communications in Slack (500GB of messages)

- Marketing data in Marketo (3TB of interactions)

- Documents in SharePoint (5TB of files)

- Analytics in Tableau (connecting to all the above)

To build a unified AI assistant, you’d need to constantly synchronize all this data to a central location. With platform API restrictions, you’re looking at months just for the initial sync, ongoing costs in the tens of thousands of dollars per month, and a complex architecture that breaks whenever any platform changes its rules.
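The “months” estimate follows from simple paging arithmetic. A minimal sketch, assuming a history endpoint limited to 1 request per minute, like the Slack limit mentioned earlier; the page size of 15 messages per request and the 1-million-message workspace are assumptions for illustration, so substitute the limits and volumes your own platforms actually document.

```python
import math


def initial_sync_days(total_records: int, records_per_request: int,
                      requests_per_minute: float) -> float:
    """Days needed to page through `total_records` at a fixed request rate."""
    requests_needed = math.ceil(total_records / records_per_request)
    minutes = requests_needed / requests_per_minute
    return minutes / (60 * 24)


# Hypothetical workspace: 1 million messages, 15 per page (assumed), 1 request/minute.
days = initial_sync_days(1_000_000, records_per_request=15, requests_per_minute=1)
print(f"{days:.1f} days")  # 46.3 days
```

The estimate ignores retries, pagination across many channels, and file downloads, and a 500GB workspace may hold far more than a million messages, so treat the result as a lower bound for this scenario rather than a forecast.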

The Platform Lock-in Multiplier

Data gravity doesn’t just make it hard to leave a platform: it makes it exponentially harder over time. Every day you use Slack AI instead of building your own, Slack’s AI gets smarter with your data while your options for alternatives diminish. It is a compound interest effect, but for vendor lock-in.

The Warehouse Workaround

The only effective counter to platform data gravity is establishing your own gravitational center: a data warehouse or lakehouse. By systematically replicating data from all platforms to a neutral location, you maintain some freedom of movement. But this requires:

- Significant infrastructure investment

- Accepting security and governance risks

- Dedicated data engineering resources

- Accepting some data latency

- Building your own AI capabilities
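Systematic replication usually means incremental extraction rather than repeated full exports. Below is a minimal sketch of one common pattern, a high-water-mark (cursor) sync, assuming each source record carries an `updated_at` timestamp. `Record`, `incremental_sync`, the in-memory `source` list, and the `warehouse` dict are hypothetical stand-ins for a platform API and a warehouse table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """Hypothetical source record; updated_at is assumed to change on every edit."""
    id: str
    updated_at: int  # e.g. epoch seconds
    payload: str


def incremental_sync(source: list[Record], warehouse: dict[str, Record],
                     watermark: int) -> int:
    """Upsert records changed since `watermark` and return the new watermark."""
    changed = [r for r in source if r.updated_at > watermark]
    for record in sorted(changed, key=lambda r: r.updated_at):
        warehouse[record.id] = record  # upsert: latest version wins
    return max((r.updated_at for r in changed), default=watermark)


source = [Record("a", 100, "v1"), Record("b", 105, "v1")]
warehouse: dict[str, Record] = {}
wm = incremental_sync(source, warehouse, watermark=0)      # copies both records

source = [Record("a", 110, "v2"), Record("b", 105, "v1")]  # "a" changed upstream
wm = incremental_sync(source, warehouse, watermark=wm)     # copies only "a"
print(wm, warehouse["a"].payload)  # 110 v2
```

The interval between sync runs is the data latency listed above. The strict `>` comparison can miss records that arrive late with a timestamp equal to the watermark, and deletions are not handled; production pipelines typically add an overlap window, tiebreakers, and tombstones.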

AI-Driven Data Gravity in Action: Real-World Examples

Microsoft’s Masterclass

Microsoft understands data gravity perfectly. They’re not trying to move your data out of Teams or SharePoint. Instead, they’re bringing AI (Copilot) to where your data already lives. Then they charge third parties, competitors included, $0.00075 per message to pull Teams messages out through metered APIs. It’s brilliant and diabolical.
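At a fraction of a cent per message the meter looks harmless, but it scales linearly with volume. A minimal sketch of the arithmetic using the $0.00075 per-message price quoted above; the message volumes are hypothetical, and `Decimal` keeps the currency math exact.

```python
from decimal import Decimal

PRICE_PER_MESSAGE = Decimal("0.00075")  # USD per message, as quoted above


def metered_access_cost(messages: int) -> Decimal:
    """Cost of reading `messages` messages through a per-message metered API."""
    return (PRICE_PER_MESSAGE * messages).quantize(Decimal("0.01"))


# Hypothetical volumes: a smaller tenant's backlog vs. a large tenant's.
for volume in (1_000_000, 50_000_000):
    print(f"{volume:>12,} messages -> ${metered_access_cost(volume):,.2f}")
```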

Slack’s Gambit

By restricting its APIs while launching Slack AI, Slack turns the institutional knowledge in your workspace (500GB in the example above) into a moat for its own AI offerings. It isn’t just protecting data; it’s weaponizing that data’s gravity.

The Snowflake and Databricks Exception

Snowflake and Databricks succeed precisely because they understand data gravity. They position themselves as neutral gravitational centers where data from multiple platforms can coexist. Their value proposition is to be the Switzerland of your data landscape.

Working With Gravity, Not Against It

Smart IT leaders recognize that fighting data gravity is like fighting physics—expensive and ultimately futile. Instead:

- Choose Your Primary Gravitational Centers Wisely: Pick 2-3 platforms that will host your most critical data masses. These become your AI platforms by default.

- Maintain Secondary Centers: Invest in a neutral data platform (warehouse/lakehouse) as your backup gravitational center. This is your insurance policy against platform abuse.

- Document Data Mass Distribution: Map where your critical data lives and how much it would cost (in time, money, and risk) to move it. This becomes crucial for strategic planning.

- Plan for Gravitational Shifts: Today’s centers of data gravity might not be tomorrow’s. Build architectures that acknowledge large data masses while maintaining some mobility for smaller satellites.
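Mapping data mass distribution can start as a simple inventory. A minimal sketch using the sizes from the example enterprise above (Tableau is omitted because it connects to the other systems rather than holding its own mass). The 1TB threshold separating gravitational centers from movable satellites, the flat $0.09/GB rate quoted earlier, and the transfer throughput are all assumptions; risk would be a further column you score yourself.

```python
from dataclasses import dataclass

CENTER_THRESHOLD_GB = 1_000   # hypothetical cut-off between centers and satellites
EGRESS_USD_PER_GB = 0.09      # assumed flat rate, free tier ignored
THROUGHPUT_GB_PER_DAY = 50    # hypothetical effective rate after API limits


@dataclass
class DataStore:
    platform: str
    size_gb: float

    def role(self) -> str:
        return "center" if self.size_gb >= CENTER_THRESHOLD_GB else "satellite"

    def move_estimate(self) -> tuple[float, float]:
        """(USD, days) to move this store once, under the assumptions above."""
        return self.size_gb * EGRESS_USD_PER_GB, self.size_gb / THROUGHPUT_GB_PER_DAY


inventory = [
    DataStore("Salesforce", 2_000),
    DataStore("Slack", 500),
    DataStore("Marketo", 3_000),
    DataStore("SharePoint", 5_000),
]

# Largest masses first: these are the hardest to move and the likeliest AI platforms.
for store in sorted(inventory, key=lambda s: s.size_gb, reverse=True):
    cost, days = store.move_estimate()
    print(f"{store.platform:<11} {store.role():<9} ~${cost:,.0f}  ~{days:.0f} days")
```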

The Future: Distributed Gravity or Black Holes?

The optimistic view suggests we’ll develop technologies to work with distributed data gravity—federated learning, privacy-preserving AI, edge computing that brings AI to data wherever it lives.
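Federated learning is a direct way of moving compute to data. Below is a minimal sketch of the aggregation step in federated averaging (FedAvg), one well-known scheme: each site trains locally and shares only model parameters, which a coordinator averages, weighted by local sample counts. The site names, sample counts, and parameter vectors are hypothetical, and local training itself is elided.

```python
def federated_average(updates: dict[str, tuple[list[float], int]]) -> list[float]:
    """Weighted average of per-site parameter vectors (FedAvg aggregation step).

    `updates` maps site name -> (locally trained parameters, number of local samples).
    Only parameters and counts cross the network; raw records stay at each site.
    """
    total_samples = sum(n for _, n in updates.values())
    dim = len(next(iter(updates.values()))[0])
    averaged = [0.0] * dim
    for params, n in updates.values():
        weight = n / total_samples
        for i, value in enumerate(params):
            averaged[i] += weight * value
    return averaged


# Hypothetical round: two sites report parameters trained on their own data.
updates = {
    "crm_site": ([0.2, 1.0], 3_000),
    "chat_site": ([0.6, 0.0], 1_000),
}
print(federated_average(updates))
```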

The pessimistic view sees platform black holes—data masses so large that nothing, not even light (or APIs), can escape. Once your enterprise data reaches critical mass within a platform, escape velocity becomes impossible to achieve.

The realistic view acknowledges both possibilities and plans accordingly. Data gravity is a force of nature in the digital universe. Like physical gravity, it can trap you or, properly harnessed, power your journey to new heights. The key is understanding the physics of data before plotting your course.

For IT leaders navigating Enterprise AI, the message is clear: respect data gravity, use it strategically, and always maintain enough thrust to avoid getting trapped in someone else’s orbit. Your architectural decisions today determine whether data gravity becomes your engine or your anchor tomorrow.